Abstract

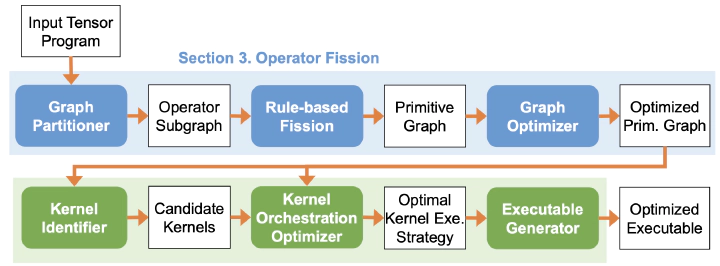

Kernel orchestration is the task of mapping the computation defined in different operators of a deep neural network (DNN) to the execution of GPU kernels on modern hardware platforms. Prior approaches greedily perform operator fusion, which fuses the computation of multiple operators into a single GPU kernel, and therefore miss a variety of optimization opportunities in kernel orchestration. This paper presents Korch, a tensor program optimizer that discovers optimal kernel orchestration strategies for tensor programs. Instead of directly fusing operators, Korch first applies operator fission to decompose operators into a small set of basic tensor algebra primitives, which enables fine-grained, inter-operator optimizations. Korch formalizes kernel orchestration as a constrained optimization problem, leverages an off-the-shelf binary linear programming solver to discover an optimal orchestration strategy, and generates an executable that can be directly deployed on modern GPU platforms. Evaluation on a variety of DNNs shows that Korch outperforms existing tensor program optimizers by up to 1.7× on V100 GPUs and up to 1.6× on A100 GPUs.
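To make the binary-programming view concrete, the following is a minimal sketch and not Korch's actual formulation. It assumes a softmax operator has been fissioned into three hypothetical primitives (`exp`, `reduce_sum`, `div`), that each candidate kernel covers some subset of them, and that every latency is an invented number. Each candidate gets one binary decision variable, every primitive must be covered by at least one selected kernel, and the objective is the total latency. Data dependencies between kernels are ignored here, and exhaustive enumeration stands in for an off-the-shelf solver.

```python
from itertools import product

# Hypothetical primitives produced by fissioning a softmax operator.
PRIMITIVES = ["exp", "reduce_sum", "div"]

# Hypothetical candidate kernels: name -> (primitives covered, made-up latency in us).
CANDIDATES = {
    "k_exp": ({"exp"}, 4.0),
    "k_sum": ({"reduce_sum"}, 5.0),
    "k_div": ({"div"}, 4.0),
    "k_exp_sum": ({"exp", "reduce_sum"}, 6.0),
    "k_sum_div": ({"reduce_sum", "div"}, 7.0),
    "k_all": ({"exp", "reduce_sum", "div"}, 11.0),
}


def best_orchestration(primitives, candidates):
    """Return the cheapest kernel selection that covers every primitive.

    Enumerates every 0/1 assignment of the per-kernel decision variables,
    which is only feasible for a handful of candidates.
    """
    names = sorted(candidates)
    required = set(primitives)
    best_cost, best_choice = float("inf"), None
    for bits in product((0, 1), repeat=len(names)):
        chosen = [name for name, bit in zip(names, bits) if bit]
        # Constraint: each primitive is executed by at least one chosen kernel.
        covered = set().union(*(candidates[name][0] for name in chosen))
        if not required <= covered:
            continue
        # Objective: sum of the latencies of the chosen kernels.
        cost = sum(candidates[name][1] for name in chosen)
        if cost < best_cost:
            best_cost, best_choice = cost, chosen
    return best_choice, best_cost


if __name__ == "__main__":
    choice, cost = best_orchestration(PRIMITIVES, CANDIDATES)
    print(choice, cost)
```

With these invented latencies, the search selects `k_exp_sum` and `k_div` (total 10.0) over the fully fused `k_all` (11.0), illustrating how a greedy "fuse everything" choice can be suboptimal under a given cost model.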